What is Explainable AI (XAI)?

Explainable Artificial Intelligence (XAI) is a set of frameworks and tools that helps developers and organizations add a layer of transparency to a specific AI model so that users can understand the logic behind its predictions. XAI gives users an idea of how a complex machine learning algorithm works and what logic drives the model’s decision-making.

As more companies incorporate artificial intelligence and advanced analytics into their business processes and automate decisions, they need transparency into how these models make such significant and impactful decisions. Business demand for explainable AI is growing because it helps organizations interpret and explain AI and machine learning (ML) models.

Explainable AI answers the critical question of “How is AI making decisions?” Technology giants such as Google and Microsoft have already launched their own explainable AI tools and resources.

Why Is Explainability So Important?

AI has the power to automate decisions, and those decisions have business impacts, positive and negative, at both large and small scales. While AI is becoming more widely used, a fundamental problem remains: people often don’t understand why an AI system makes the decisions it does, and sometimes not even the developers who created it do.

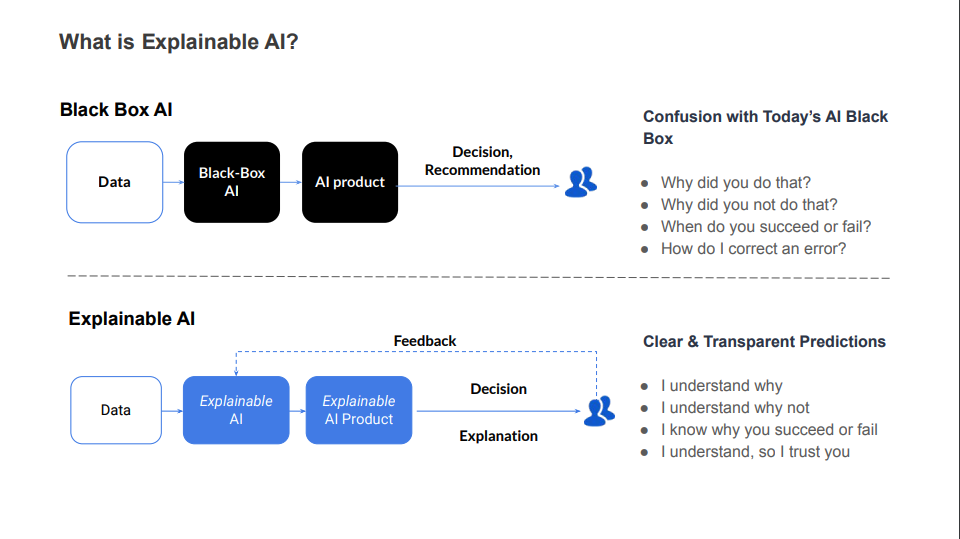

AI algorithms are sometimes like black boxes; that is, they take inputs and provide outputs with no way to understand their inner workings. Developers and users ask themselves questions such as: How do these models reach certain conclusions? What data do they use? Are the results reliable?
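One model-agnostic way to start answering these questions is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below is illustrative only. The `black_box` function, its feature names, and the synthetic data are assumptions made for this example, and the labels are generated by the model itself, so the result measures how strongly the model depends on each feature rather than how well it fits real data.

```python
import random

def black_box(row):
    """Hypothetical opaque model: we only call it, never inspect it."""
    income, age, noise = row  # 'noise' is deliberately ignored by the model
    return 1 if (0.8 * income + 0.2 * age) > 50 else 0

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    hits = sum(model(r) == y for r, y in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop caused by shuffling each feature column in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Synthetic data: three features drawn uniformly from [0, 100].
data_rng = random.Random(42)
rows = [tuple(data_rng.uniform(0, 100) for _ in range(3)) for _ in range(500)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, n_features=3)
for name, value in zip(["income", "age", "noise"], importances):
    print(f"{name}: accuracy drop {value:.3f}")
```

Because the hypothetical model never reads the third feature, shuffling it leaves accuracy unchanged, while shuffling the heavily weighted first feature hurts the most. Production libraries offer more robust versions of this idea, with repeated shuffles and held-out data.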

Unlike black-box AI models, which often function as opaque decision-making systems, explainable AI aims to provide information about the model’s reasoning and decision-making process. The architecture in the accompanying image represents the typical structure of an explainable AI system.

Many organizations want to leverage AI but are not comfortable letting a model make more impactful decisions because they do not yet trust it. Explainability is critical here: it provides insight into how models make decisions, which increases trust in them and makes it possible to improve their performance. For this reason, the demand for XAI is growing rapidly.

The AI field is developing constantly, and we are currently witnessing significant growth. However, new models are becoming more and more challenging to understand and monitor, which lowers confidence in them. XAI plays an important role here, providing valuable information about how these models work. According to an IBM study, users of its XAI platform achieved a 15% to 30% increase in model accuracy.

The Principles of Explainable Artificial Intelligence

The AI community actively seeks explainability as one of the many desirable characteristics of reliable AI systems. The National Institute of Standards and Technology (NIST), part of the U.S. Department of Commerce, has defined four principles of explainable artificial intelligence:

- Explanation: Systems deliver accompanying evidence or reasons for all outcomes.

- Meaningful: Systems provide explanations that are understandable to individual users.

- Explanation Accuracy: The explanation correctly reflects the system’s output-generating process.

- Knowledge Limits: The system only operates under the conditions for which it was designed or when it reaches sufficient confidence in its output.

In other words, an AI system must give evidence, support, and reasoning for each output, provide explanations that its users can understand, and accurately reflect the process it used to reach the outcome. Furthermore, it should only operate under the conditions for which it was designed and should not give results when it lacks sufficient confidence.
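As a rough illustration of how these principles might look in code, the sketch below wraps a tiny linear scoring model so that every decision comes with ranked evidence, and the system abstains when its confidence is too low. Everything here is hypothetical: the loan-scoring scenario, the `WEIGHTS`, the `BIAS`, and the `min_confidence` threshold are assumptions chosen for the example, not part of the NIST document.

```python
import math

# Hypothetical weights for an illustrative loan-scoring model.
WEIGHTS = {"income": 0.04, "debt": -0.06, "years_employed": 0.3}
BIAS = -1.0

def predict_with_explanation(features, min_confidence=0.75):
    """Return a decision plus its evidence, or abstain below the threshold."""
    # Explanation accuracy: in a linear model, the per-feature contributions
    # plus the bias add up exactly to the score that drives the decision.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    prob = 1.0 / (1.0 + math.exp(-score))  # logistic probability of "approve"
    confidence = max(prob, 1.0 - prob)

    # Knowledge limits: give no result without sufficient confidence.
    if confidence < min_confidence:
        return {
            "decision": None,
            "confidence": confidence,
            "reason": "confidence below threshold; deferring to a human",
        }

    # Explanation + meaningful: rank features by how strongly they pushed
    # the score, so the user sees the main drivers first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {
        "decision": "approve" if prob >= 0.5 else "decline",
        "confidence": confidence,
        "evidence": ranked,
    }

clear_case = predict_with_explanation({"income": 80, "debt": 10, "years_employed": 5})
borderline = predict_with_explanation({"income": 30, "debt": 10, "years_employed": 1})
print(clear_case)
print(borderline)
```

The first applicant is approved with `income` listed as the strongest driver, while the second falls near the decision boundary and the system declines to answer. For non-linear models the contributions are no longer exact, which is where dedicated attribution methods come in.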

Challenges of Explainable AI

Explainable AI is a relatively new and growing area, so it still faces many challenges. Here are some of the most relevant ones.

One of the primary challenges of explainable AI is finding the right balance between model accuracy and explainability. Explainable AI systems may not achieve the same level of accuracy as non-explainable, black-box models, and how much accuracy to trade for transparency remains an open question in the field.

While the goal of explainable AI is to generate explanations that are both accurate and understandable, this objective is not always guaranteed. Generating accurate explanations that effectively convey the underlying decision-making process can be complex.

Explainable AI models can be more difficult to train and fine-tune than non-explainable machine learning models. They can also be more challenging to implement because they need human intervention at some point. And depending on the model and the data it was trained on, the algorithm may learn a biased or an unbiased view of the world.

The perception of fairness in AI systems is highly contextual and depends on the inputs provided to the machine learning algorithms. AI systems can make incorrect decisions, especially when the input data is biased, incomplete, or lacking relevant context.
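A simple first check for this kind of problem is to compare how often each group receives a positive decision. The sketch below computes that gap, sometimes called the demographic parity difference, on made-up records. The group labels and decisions are invented for illustration, and this metric is only one of several fairness notions, so a large gap is a prompt for investigation rather than proof of unfairness.

```python
from collections import defaultdict

def positive_rate_by_group(records):
    """Share of positive decisions (1) per group in (group, decision) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def parity_gap(rates):
    """Difference between the highest and lowest positive rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical decisions: 1 = approved, 0 = declined.
records = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = positive_rate_by_group(records)
gap = parity_gap(rates)
print(rates, gap)
```

Here group A is approved three times out of four and group B once out of four. Whether such a gap is acceptable depends on the context the paragraph above describes.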

Determining the trustworthiness of an AI system’s conclusions can be difficult without evaluating the underlying process by which it arrived at those conclusions.

How can we overcome XAI challenges?

To overcome these challenges, ongoing research and collaboration between researchers, developers, and policymakers are essential.

This collaboration will lead to the development of robust methodologies, guidelines, and standards that ensure explainable AI systems strike the right balance between accuracy, explainability, and fairness. By addressing these challenges head-on, we can create a future where AI systems are not only powerful but also transparent, trustworthy, and accountable, driving positive impact and benefiting businesses worldwide.

Conclusion

Explainable AI is a key factor in meeting the demand for understandable, transparent, and reliable AI-based solutions. As artificial intelligence continues to play a pivotal role in decision-making across industries such as healthcare and business, its impact on society and organizations becomes increasingly significant.

To benefit from the possibilities of AI, we must be able to rely on it. To get there, we first have to solve these challenges and work together so that models can evolve and become more reliable.

XAI empowers us to understand how AI arrives at its conclusions, shedding light on its reasoning and providing insights that support informed decision-making. As humans, we are much more likely to trust AI and feel comfortable with it when we understand how it works and makes decisions.